Can You Use ChatGPT to Write Scientific Papers?

Six months ago I tried to use ChatGPT to write a summary of the medical research on a topic. It failed miserably, and perhaps the worst part was that it made up scientific references that didn’t exist. That was ChatGPT 3.5, so now that I’ve been using ChatGPT 4 for some time, it’s time to test it again. That test revealed some interesting trends for the next decade that should worry physicians who practice orthobiologics. Let’s dig in.

What Is ChatGPT? How Does It Work?

ChatGPT is a generative AI that uses a large language model built on transformers to basically “guess the right next word.” It’s trained on massive datasets drawn largely from the Internet, and it works by assigning a multidimensional vector to each word. These vectors capture how words relate, so the model can use vector math to decide which words are similar. For example, the relationship between queen and woman is the same as the relationship between king and man.
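To make the vector math concrete, here is a minimal sketch of the king/queen analogy. The three-dimensional vectors are invented for illustration (real models learn vectors with hundreds or thousands of dimensions), and the helper names `cosine_similarity` and `analogy` are hypothetical.

```python
import math

# Toy 3-dimensional "embeddings", invented for illustration only.
# The axes loosely mean: (royalty, maleness, femaleness).
vectors = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "bank":  [0.2, 0.5, 0.5],
}

def cosine_similarity(a, b):
    """Similarity of two vectors by the angle between them (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c):
    """Return the word whose vector is closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine_similarity(vectors[w], target))

# "king" minus "man" plus "woman" lands nearest to "queen" in this toy space.
print(analogy("king", "man", "woman"))
```

With these made-up numbers the subtraction removes the “maleness” from king and the addition puts “femaleness” back, which is why the nearest remaining word is queen.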

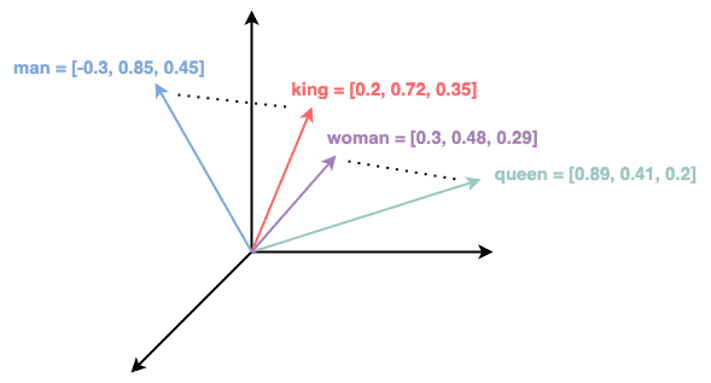

It also uses transformers, layers in the neural network that provide context for how the words of a sentence relate to one another. Interestingly, the transformer architecture was introduced by Google researchers in a 2017 paper, yet it was OpenAI, backed by a multibillion-dollar investment from Microsoft, that launched ChatGPT while Google was caught sleeping at the generative AI wheel.

For example, in the above diagram, the goal is to finish the sentence “John wants his bank to cash the ______”. Each transformer layer adds context to the sentence. The first transformer figures out that “wants” and “cash” are both verbs in this sentence instead of nouns. The second transformer layer figures out that “bank” in this context is a financial institution and not a river bank. Eventually, the transformers help ChatGPT figure out that the missing word is “check”.

GPT-3, the model family behind the original ChatGPT, stacks 96 transformer layers, each built around an attention mechanism. If you want to delve deeper into how ChatGPT works, this is a great Ars Technica piece on the subject.
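For readers who want to see how one of the transformer layers described above adds context, here is a heavily simplified sketch of the attention step. It assumes made-up two-dimensional word vectors (axis 0 is roughly “money,” axis 1 is roughly “nature”) and skips the learned projection matrices a real transformer uses, so treat it as an illustration rather than how ChatGPT is actually implemented.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vecs):
    """Scaled dot-product self-attention with queries = keys = values = vecs."""
    dim = len(vecs[0])
    outputs, all_weights = [], []
    for query in vecs:
        # How strongly does this word "look at" every word in the sentence?
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vecs]
        weights = softmax(scores)
        # New vector = weighted blend of all the word vectors.
        blended = [sum(w * v[j] for w, v in zip(weights, vecs)) for j in range(dim)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Invented vectors: "bank" starts out ambiguous, "cash" is strongly about money.
toy = {"wants": [0.1, 0.1], "bank": [1.0, 1.0], "cash": [3.0, 0.0]}
words = list(toy)
outputs, weights = self_attention([toy[w] for w in words])

bank = words.index("bank")
print("bank attends to:", dict(zip(words, weights[bank])))
print("bank after attention:", outputs[bank])
```

In this toy run, “bank” puts most of its attention on “cash,” so its updated vector leans toward the money axis. That is the same disambiguation the bank example describes, just with numbers small enough to follow by hand.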

Using ChatGPT for Scientific Research

Six months ago I tried to use ChatGPT 3.5 and Google Bard to write a summary of scientific research after numerous colleagues claimed they were using these tools to write reviews in specific areas. These large language models failed miserably, first by including off-topic research and then by making up the scientific papers being cited. That second distinction went to Bard. When I say it made these citations up, I mean they were entirely fictional.

The good news back then was that ChatGPT did guess the right answer to the question I asked, although it couldn’t show how it got there by reasoning from citations that supported its conclusion. At the time I was using ChatGPT 3.5 and an early version of 4.0. Now that the official version of 4.0 is out, I thought I would try it again.

ChatGPT 3.5 vs 4 Differences

Here are the differences:

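- ChatGPT 3.5 has 175 billion “parameters,” while 4.0 is rumored to have around 1.5 trillion (OpenAI has not published the figure). Parameters are the adjustable weights inside the neural network that are learned during training, not the size of the training dataset.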

- ChatGPT 3.5 can keep roughly 3,000 words of a conversation in context, while 4.0 can keep about 25,000.

- ChatGPT 4 is also multimodal, so it can understand and answer questions about images as well as text.
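The conversation limits in the list above matter in practice because anything that falls outside the window is simply forgotten. Here is a rough sketch of that budget check. It counts whitespace-separated words as a stand-in (the real limits are measured in tokens, which are smaller than words), and the function names are hypothetical.

```python
# Word budgets taken from the list above; real limits are measured in tokens,
# so these are approximations only.
WORD_LIMITS = {"3.5": 3_000, "4": 25_000}

def words_used(messages):
    """Approximate the size of a conversation by counting words."""
    return sum(len(message.split()) for message in messages)

def fits_in_context(messages, version):
    """True if the whole conversation fits in the given version's word budget."""
    return words_used(messages) <= WORD_LIMITS[version]

def trim_to_fit(messages, version):
    """Drop the oldest messages until what remains fits the budget."""
    kept = list(messages)
    while kept and not fits_in_context(kept, version):
        kept.pop(0)
    return kept

# Placeholder conversation: two long pasted abstracts plus a short request.
conversation = ["word " * 2000, "word " * 2000, "summarize the PRP trials"]

print(words_used(conversation))
print(fits_in_context(conversation, "3.5"), fits_in_context(conversation, "4"))
print(len(trim_to_fit(conversation, "3.5")))
```

Dropping the oldest messages first is only one possible policy, chosen here because it is the simplest to show.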

The Research Question

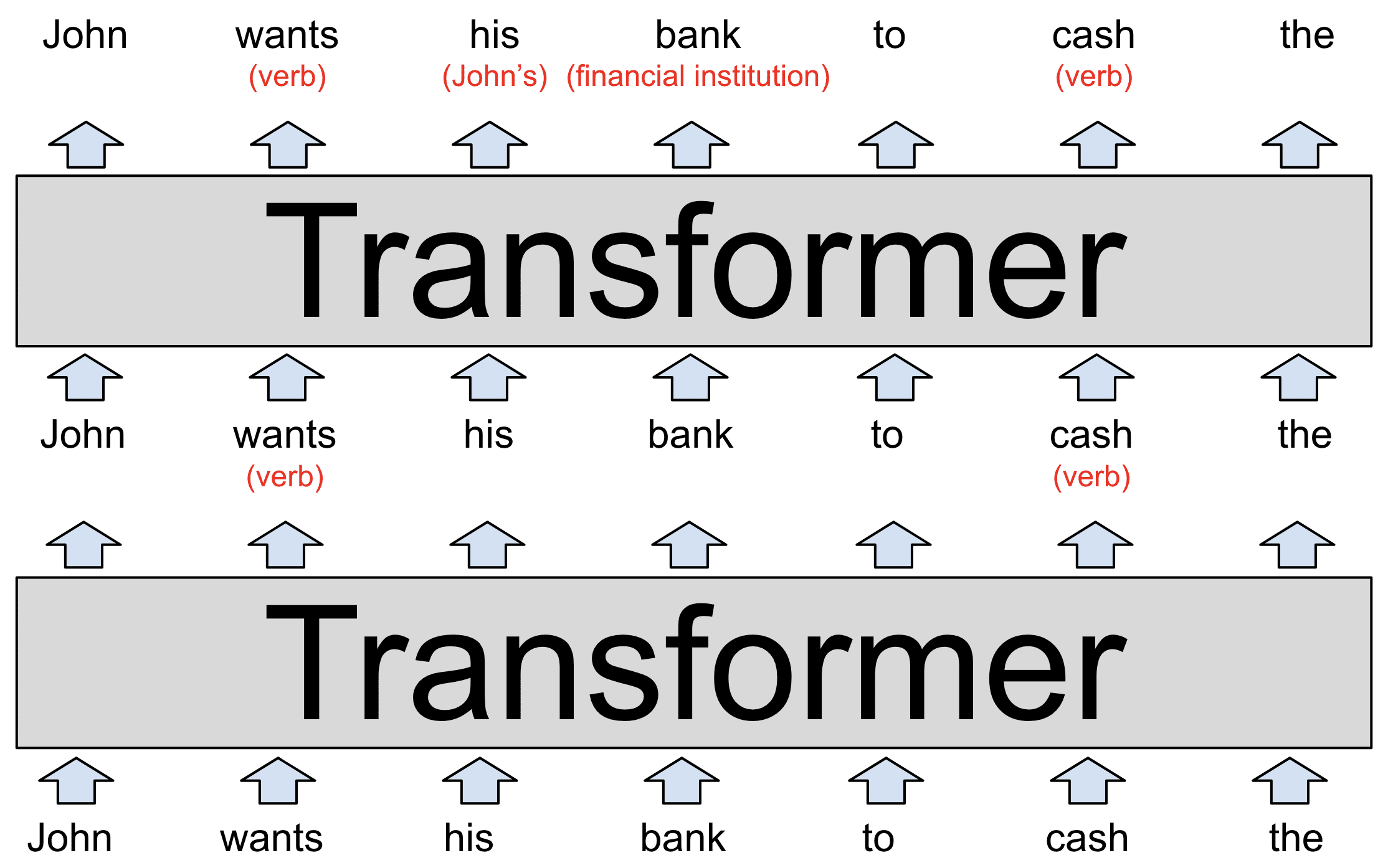

If you recall, several months ago I took about a week to review the entire scientific literature on the use of PRP in Randomized Controlled Trials for musculoskeletal applications. Hence, I know exactly what’s out there listed in the US National Library of Medicine’s PubMed system. Here is my prompt:

“Summarize the scientific literature on the use of platelet-rich plasma injection to treat knee osteoarthritis. Do not include meta-analyses or systemic reviews, but only original randomized controlled research studies. Include PRP compared to placebo, compared to corticosteroids, and hyaluronic acid. Include all articles indexed in PubMed only.”

This is what I got back:

Here the studies cited are real, but I know that there are at least 100 of them, so this review is missing 90+. Hence, I added, “The above literature review should include approximately 100 studies.” This is what I got back:

So basically, I was told that my task is way too big for ChatGPT 4! The problem, of course, is that the ChatGPT summary is way too short and would make a reader think that there are only a handful of RCTs.
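One way to sanity-check how much of the literature a summary like this covers is to ask PubMed directly how many records match. The sketch below builds a query for NCBI’s public E-utilities `esearch` endpoint and parses its JSON reply. The search term is an assumed example, not the query used for the manual review mentioned above, and the sample reply is made-up placeholder data so the code runs without network access.

```python
import json
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def build_esearch_url(term, retmax=200):
    """Build a PubMed search URL that returns JSON."""
    params = {"db": "pubmed", "term": term, "retmode": "json", "retmax": retmax}
    return ESEARCH_URL + "?" + urlencode(params)

def parse_esearch(raw_json):
    """Return (total matching records, list of PubMed IDs) from an esearch reply."""
    result = json.loads(raw_json)["esearchresult"]
    return int(result["count"]), result["idlist"]

def coverage(cited, total):
    """Fraction of matching PubMed records that a summary actually cited."""
    return cited / total if total else 0.0

# Assumed example query: PRP + knee osteoarthritis, randomized controlled trials only.
term = ('"platelet-rich plasma"[Title/Abstract] AND "knee osteoarthritis"[Title/Abstract] '
        'AND randomized controlled trial[pt]')
url = build_esearch_url(term)
print(url)

# To run the query for real (needs network access):
# from urllib.request import urlopen
# with urlopen(url) as response:
#     total, pmids = parse_esearch(response.read().decode("utf-8"))

# Placeholder reply with invented IDs, used only to show the parsing step.
sample_reply = '{"esearchresult": {"count": "2", "idlist": ["00000001", "00000002"]}}'
total, pmids = parse_esearch(sample_reply)
print(total, pmids)
```

Comparing the live count with the number of studies in the AI summary, for example with `coverage(10, 100)`, gives a quick read on how much is missing before anyone mistakes the summary for the whole literature.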

The Insidious Part of Using Generative AI For Search

Notice how the summary above pulls from whatever it finds and treats as authoritative. The problem is that it’s using studies that never used real PRP, but fake PRP. If you recall, I pointed out that the fake PRP studies, which used platelet concentrations under 2X, have a much higher failure rate. Hence, these studies sway ChatGPT toward stating that the jury is still out on PRP efficacy.

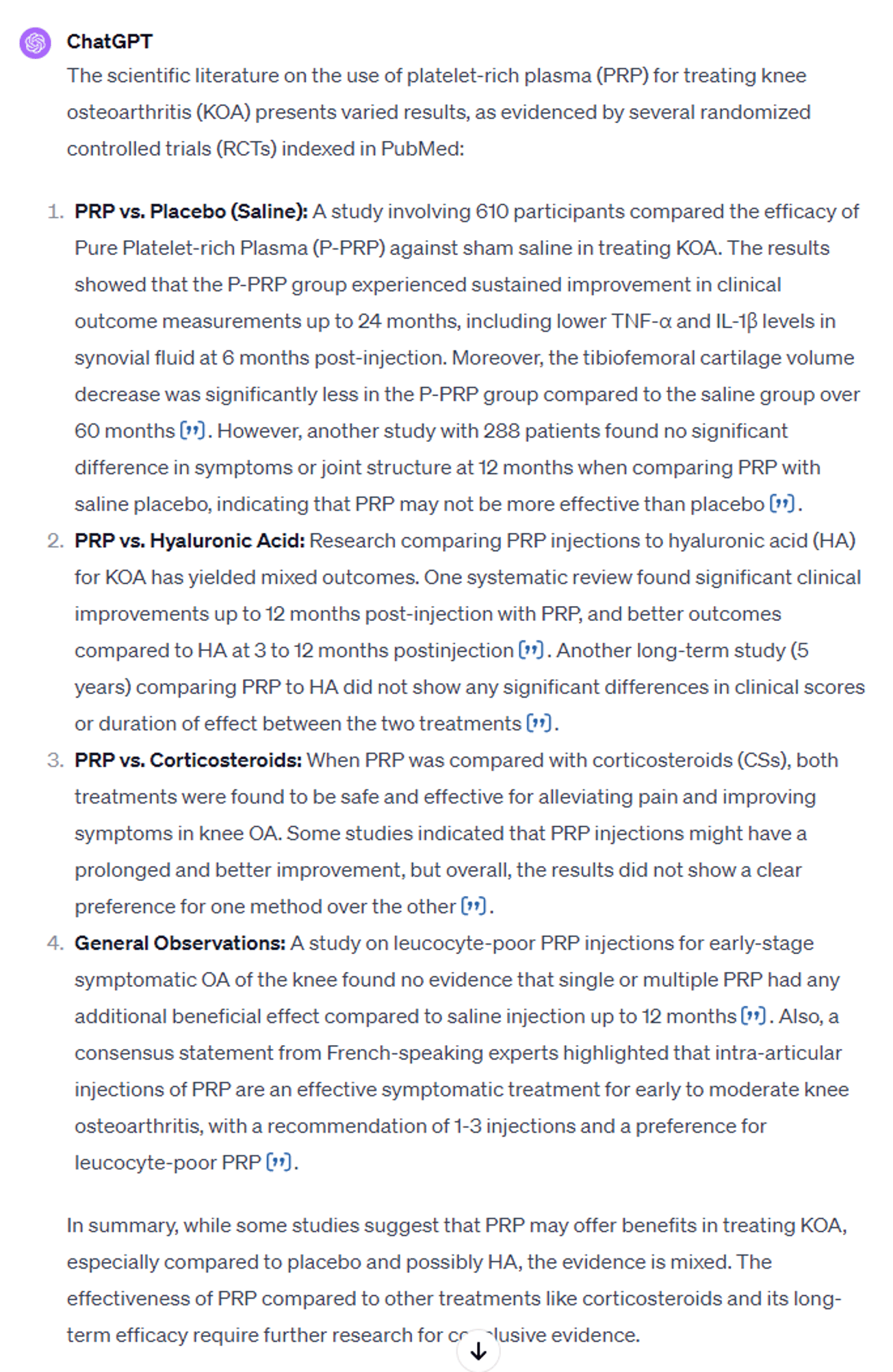

Can I tweak the prompt to filter out these fake PRP studies? I added this:

“In the above review, filter out all studies that use PRP which is under two times the concentration of platelets found in the whole blood.”

That caused ChatGPT to add this paragraph:

“PRP Concentration: A review highlighted that PRP was effective in treating KOA when the mean platelet concentration in PRP treatment was significantly higher than the baseline whole blood platelet concentration (4.83 to 5.91 times higher). Studies with a mean platelet concentration around 3.48 to 4.04 times higher than baseline showed less significant improvements.”

It highlighted a study that was about PRP concentration versus results. However, it didn’t have the reasoning chops to read each study and pick out those with PRP concentrations under 2X, even when those numbers were reported in the paper.
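The filter I was asking for is simple once the numbers have been pulled out of each paper. The sketch below shows the logic under the assumption that someone has already extracted the platelet counts by hand. The study labels and counts are invented placeholders, not real trials, and the `Study` class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Study:
    label: str
    prp_platelets: Optional[float]    # platelets per microliter in the PRP, if reported
    blood_platelets: Optional[float]  # platelets per microliter in whole blood, if reported

def concentration_ratio(study):
    """PRP concentration as a multiple of whole blood, or None if not reported."""
    if not study.prp_platelets or not study.blood_platelets:
        return None
    return study.prp_platelets / study.blood_platelets

def split_by_concentration(studies, threshold=2.0):
    """Sort studies into kept (>= threshold), excluded (< threshold), and unknown."""
    kept, excluded, unknown = [], [], []
    for study in studies:
        ratio = concentration_ratio(study)
        if ratio is None:
            unknown.append(study.label)
        elif ratio < threshold:
            excluded.append(study.label)
        else:
            kept.append(study.label)
    return kept, excluded, unknown

# Invented placeholder records, for illustration only.
studies = [
    Study("Study A", 1_000_000, 250_000),  # 4.0X
    Study("Study B", 375_000, 250_000),    # 1.5X, under the 2X cutoff
    Study("Study C", None, None),          # concentration not reported
    Study("Study D", 500_000, 250_000),    # exactly 2.0X, so it is kept
]

kept, excluded, unknown = split_by_concentration(studies)
print("kept:", kept)
print("excluded:", excluded)
print("unknown:", unknown)
```

The hard part is not this arithmetic but reading each paper to find the two platelet counts, which is exactly the step ChatGPT 4 did not do.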

The upshot? If you’re trying to get an idea of what’s published on a research topic, ChatGPT 4 may be a good option. However, if you’re writing a review paper and trying to use it to comprehensively review the totality of the scientific literature, it’s not the right tool. Also, realize that the conclusions it draws are susceptible to simple reasoning errors and that it can’t yet dissect all of the data to draw new conclusions. Finally, as I have warned in the past, research papers that used fake PRP will come back and haunt the orthobiologics community by poisoning the proverbial PRP well.

In the end, I’m hopeful that as this technology becomes smarter, it will give the right answer if you ask the right question. However, realize that the view of the world it gives you will still depend on asking the right questions!

If you have questions or comments about this blog post, please email us at [email protected]

NOTE: This blog post provides general information to help the reader better understand regenerative medicine, musculoskeletal health, and related subjects. All content provided in this blog, website, or any linked materials, including text, graphics, images, patient profiles, outcomes, and information, are not intended and should not be considered or used as a substitute for medical advice, diagnosis, or treatment. Please always consult with a professional and certified healthcare provider to discuss if a treatment is right for you.