It Begins: How AI Will Make MRI MUCH More Valuable

Credit: Shutterstock

In the not-too-distant future, AI will change medicine and make radiologists extinct. In fact, several new research studies show that this AI imaging revolution is already quietly happening. Let’s dig in.

Current MRI Imaging is Held Back By Having a Human in the Equation

Today’s MRI begins with a patient being placed inside a ring-shaped superconducting magnet, which aligns the hydrogen protons in their tissues. Radiofrequency energy is then pulsed into the tissues, knocking those aligned protons out of equilibrium. When the radiofrequency pulses are turned off, the protons relax back into alignment and emit energy that is picked up by sensors called receiver coils. All sorts of data are captured in that signal, and all of that information is then used to create a picture that makes sense to a human. It’s that last step of making pretty pictures that has held this technology back, and it’s the bottleneck AI will now remove, releasing the technology’s full potential.
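Those radiofrequency pulses aren’t arbitrary. To knock the protons out of alignment, the pulses have to match the frequency at which hydrogen protons precess in the magnet’s field, the Larmor frequency f = (γ/2π) × B₀, where γ/2π is about 42.58 MHz per tesla for hydrogen and B₀ is the field strength. Here’s a minimal Python sketch of that arithmetic, assuming the common clinical field strengths of 1.5 and 3 tesla (the constant and function names are my own, for illustration only):

```python
# Gyromagnetic ratio of the hydrogen nucleus (proton) divided by 2*pi, in MHz per tesla.
GAMMA_BAR_H_MHZ_PER_T = 42.577


def larmor_frequency_mhz(field_strength_tesla: float) -> float:
    """Return the proton resonance (Larmor) frequency in MHz: f = (gamma / 2*pi) * B0."""
    return GAMMA_BAR_H_MHZ_PER_T * field_strength_tesla


# Assumed example field strengths for typical clinical scanners.
for b0 in (1.5, 3.0):
    print(f"{b0} T scanner: RF pulses near {larmor_frequency_mhz(b0):.1f} MHz")
```

At tens to a little over a hundred megahertz, both the pulses and the signal the protons send back sit squarely in the radio part of the electromagnetic spectrum.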

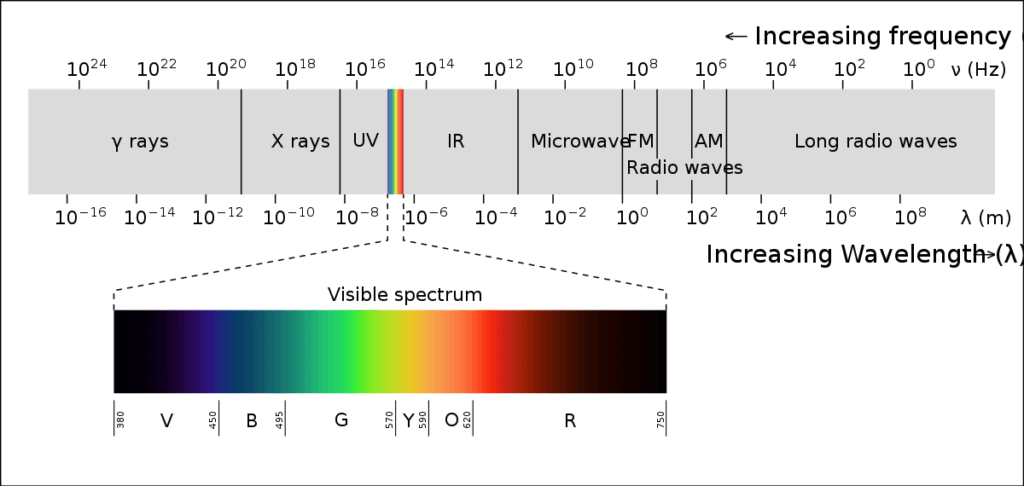

Let’s Think about MRI in Terms of our Human Eyes

Credit: Wikimedia Commons

Above is a diagram of the electromagnetic spectrum, showing the very limited visible range our eyes can detect. Notice that it’s just a tiny slice of all of the electromagnetic energy out there. Just imagine what the world would look like if your eyes could see UV light, x-rays, infrared, microwaves, and radio waves. For example, your phone would be bursting with activity because it’s constantly sending and receiving in the radio spectrum. Your doctor’s office would be glowing with intermittent bursts of x-rays. Your microwave would be glowing with energy while you heated up leftovers.

Now think about MRI the same way. Just as our eyes miss most of the energy around us, the pictures we create so that humans can read them capture only a fraction of the data the scanner collects. That gives you an idea of how much we aren’t seeing.

AI and Chaotic Data

Our human brains are very bad at seeing trends in data that look chaotic. A great example is a study we did that measured the levels of dozens of cytokines and growth factors in patients’ knees to see who would respond best to a bone marrow concentrate procedure. When humans looked at the data, it was impossible to find any trends. In fact, it was all noise until we used a machine learning algorithm that could “see” that certain profiles of these chemicals were associated with better outcomes.

Examples of AI in Medicine

This trend is just beginning, but here are some early examples.

EKG

EKG interpretation is one of those areas of medicine that’s hard to learn, and even the cardiologists who master it can only use it to find serious heart disease. Now a machine learning program can analyze slight differences in the structure of the waveforms to detect pre-diabetes and type 2 diabetes with 97% accuracy, without a blood test (1). That’s something no human cardiologist can do.
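For readers wondering what “97% accuracy” means: accuracy is the share of all patients a model classifies correctly, while sensitivity (the share of true cases it catches) and specificity (the share of people without the condition it correctly clears) break that single number down. The Python sketch below uses hypothetical counts chosen only to illustrate the arithmetic; they are not the results reported in (1).

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    true_positive: int   # has diabetes or pre-diabetes, and the model flags it
    true_negative: int   # doesn't have it, and the model doesn't flag it
    false_positive: int  # doesn't have it, but the model flags it anyway
    false_negative: int  # has it, but the model misses it

    def accuracy(self) -> float:
        total = self.true_positive + self.true_negative + self.false_positive + self.false_negative
        return (self.true_positive + self.true_negative) / total

    def sensitivity(self) -> float:
        return self.true_positive / (self.true_positive + self.false_negative)

    def specificity(self) -> float:
        return self.true_negative / (self.true_negative + self.false_positive)


# Hypothetical counts for 1,000 patients, for illustration only.
cm = ConfusionMatrix(true_positive=480, true_negative=490, false_positive=10, false_negative=20)
print(f"accuracy={cm.accuracy():.2f} sensitivity={cm.sensitivity():.2f} specificity={cm.specificity():.2f}")
# prints "accuracy=0.97 sensitivity=0.96 specificity=0.98"
```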

Ultrasound

At a recent cardiology conference (ESC 2022), an AI system out of Stanford went head to head with human sonographers in a randomized, blinded trial. Both the AI and the sonographers used ultrasound images of the heart to calculate the ejection fraction, the percentage of blood the left ventricle pumps out with each beat. The AI’s readings were mixed in with the human-marked scans and then double-checked by cardiologists who didn’t know which were which. The AI system was much more accurate than the humans (2).
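Ejection fraction itself is a simple ratio: the volume of blood the left ventricle pumps out with each beat (end-diastolic volume minus end-systolic volume) divided by the volume it held when full. Here’s a short Python sketch with hypothetical volumes. The AI in the trial worked from the ultrasound images; this code only shows the formula behind the number, not how the AI estimated it.

```python
def ejection_fraction(end_diastolic_volume_ml: float, end_systolic_volume_ml: float) -> float:
    """Percent of the blood in the full left ventricle that is pumped out with one beat."""
    if end_diastolic_volume_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= end_systolic_volume_ml <= end_diastolic_volume_ml:
        raise ValueError("end-systolic volume must be between 0 and the end-diastolic volume")
    stroke_volume_ml = end_diastolic_volume_ml - end_systolic_volume_ml
    return 100.0 * stroke_volume_ml / end_diastolic_volume_ml


# Hypothetical volumes: 120 mL when the ventricle is full, 50 mL left after it contracts.
print(f"EF = {ejection_fraction(120, 50):.1f}%")  # prints "EF = 58.3%"
```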

MRI

A good knee MRI can take 30 minutes in the scanner. Scientists at Stanford recently created an AI system that can take the data from just 5 minutes in the scanner and produce a better image, with better quantitative readings, than a 30-minute MRI (3). Scientists at Johns Hopkins developed a similar fast MRI system using AI (5). Last year, an AI system accurately detected and graded lesions on knee MRIs, a job that used to belong to human radiologists (4). Finally, UW researchers taught an AI system to identify markers of heart disease on a knee MRI by evaluating the wall of the popliteal artery, the main artery running behind the knee (6).
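Why would fewer minutes in the scanner normally mean a worse picture? An MRI scanner doesn’t record pixels directly. It records raw frequency data (often called k-space) that is converted into an image with a Fourier transform, and in a simple acquisition the scan time grows with how much of that data is collected. The toy 1D Python sketch below keeps only about one-sixth of the raw samples, roughly the 5-versus-30-minute ratio, and reconstructs a blurrier profile. It’s a generic illustration with a hypothetical tissue profile, not the method used in (3) or (5), both of which rely on trained deep learning models.

```python
import cmath


def dft(x):
    """Discrete Fourier transform: tissue profile -> simulated raw (k-space) samples."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    """Inverse discrete Fourier transform: raw (k-space) samples -> tissue profile."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def keep_low_frequencies(kspace, max_freq):
    """Zero every sample whose frequency magnitude exceeds max_freq (a simulated shorter scan)."""
    n = len(kspace)
    # In an unshifted DFT, index k holds frequency k for k <= n/2 and frequency k - n above that.
    return [v if min(k, n - k) <= max_freq else 0j for k, v in enumerate(kspace)]


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical 1D tissue profile: a thin, sharp-edged bright band.
n = 96
profile = [1.0 if 40 <= i < 48 else 0.0 for i in range(n)]
kspace = dft(profile)

# Keeping frequencies -8..8 retains 17 of 96 samples, about one-sixth of the raw data.
max_freq = 8
kept = sum(1 for k in range(n) if min(k, n - k) <= max_freq)
short_kspace = keep_low_frequencies(kspace, max_freq)

full_recon = [v.real for v in idft(kspace)]
short_recon = [v.real for v in idft(short_kspace)]

print(f"samples kept: {kept} of {n}")
print(f"error using all raw data:    {mean_abs_error(profile, full_recon):.4f}")
print(f"error using one-sixth of it: {mean_abs_error(profile, short_recon):.4f}")
```

With all of the raw data, the profile comes back essentially perfectly; with one-sixth of it, the sharp edges smear out. Recovering that lost sharpness from a short scan is the problem the AI systems above are trained to solve.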

The Next Steps in MRI

The MRI systems above represent incremental changes: a faster knee scan, or tools that help human radiologists get more out of an image more quickly. The next step is to skip creating the image altogether and have the AI system “read” trends in the raw MRI data, associating them with specific diagnoses or responses to treatment. For example, the raw data could be used to accurately predict who will need a knee replacement within 5 years, who will respond well to a specific surgery, or who will respond well to a PRP or bone marrow concentrate injection. That’s when most radiologists will go extinct.
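Here’s one concrete piece of what “creating the image” throws away. The scanner’s raw measurements are complex numbers, carrying both a magnitude and a phase, and a standard diagnostic image displays only the magnitude of the reconstructed signal. In this toy 1D Python sketch, two hypothetical tissue signals have identical brightness but different phase: their raw data differ, yet their magnitude images are the same. Real scanners collect 2D or 3D data from multiple receiver coils; this only illustrates the principle and is not a method from any of the studies above.

```python
import cmath


def dft(x):
    """Discrete Fourier transform: tissue signal -> simulated raw (k-space) samples."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    """Inverse discrete Fourier transform: raw (k-space) samples -> tissue signal."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n for t in range(n)]


n = 32
# Two hypothetical 1D "tissue" signals: same brightness, but B has a gradual phase shift.
tissue_a = [1.0 + 0j if 10 <= i < 20 else 0j for i in range(n)]
tissue_b = [cmath.exp(1j * cmath.pi * i / n) if 10 <= i < 20 else 0j for i in range(n)]

raw_a, raw_b = dft(tissue_a), dft(tissue_b)

# The raw data differ...
raw_difference = max(abs(a - b) for a, b in zip(raw_a, raw_b))
# ...and a complex reconstruction recovers each signal exactly (up to rounding)...
recon_error = max(abs(r - t) for r, t in zip(idft(raw_b), tissue_b))
# ...but the magnitude image, the picture a reader actually sees, is identical for both.
magnitude_a = [abs(v) for v in idft(raw_a)]
magnitude_b = [abs(v) for v in idft(raw_b)]
image_difference = max(abs(a - b) for a, b in zip(magnitude_a, magnitude_b))

print(f"raw data differ by up to {raw_difference:.2f}")
print(f"complex reconstruction error: {recon_error:.1e}")
print(f"magnitude images differ by up to {image_difference:.1e}")
```

An AI system working on the raw data would, at least in principle, have access to information like this that never makes it into the picture a radiologist reads.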

WNL

WNL in medicine is an abbreviation that means “Within Normal Limits.” Many patients with pain and other spinal problems have been frustrated when human radiologists read their spine MRIs as normal. I’ve blogged about how these radiologists often miss findings like multifidus atrophy that we know aren’t normal. I suspect that in the next decade, as AI systems gain the ability to read the raw data behind spine MRIs, this problem of “normal” scans in patients who have legitimate pain will go away. We’ll find lots of trends in that data that will allow AI systems to accurately tell who has pain and who does not.

The upshot? Medicine is about to be changed forever for the better. Many diagnostic studies that we humans can only take so far are about to yield an explosion of diagnostic information that we never imagined possible. That will extend to MRI, and the concept of a “normal” scan in a patient who has pain will slowly go extinct. That’s a future that all of my patients can look forward to!

_________________________________________________

References:

(1) Kulkarni AR, Patel AA, Pipal KV, et al. Machine-learning algorithm to non-invasively detect diabetes and pre-diabetes from electrocardiogram. BMJ Innovations. Published Online First: 09 August 2022. doi: 10.1136/bmjinnov-2021-000759.

(2) Fierce Biotech. ESC 2022: Ultrasound AI outperforms human clinicians in randomized, blinded study. https://www.fiercebiotech.com/medtech/esc-2022-ultrasound-ai-outperforms-human-clinicians-randomized-blinded-real-world-study?utm_source=email&utm_medium=email&utm_campaign=LS-NL-FierceMedTech. Accessed 8/30/22.

(3) Chaudhari AS, Grissom MJ, Fang Z, Sveinsson B, Lee JH, Gold GE, Hargreaves BA, Stevens KJ. Diagnostic accuracy of quantitative multi-contrast 5-minute knee MRI using prospective artificial intelligence image quality enhancement. AJR Am J Roentgenol. 2020 Aug 5. doi: 10.2214/AJR.20.24172.

(4) Astuto B, Flament I, Namiri NK, Shah R, Bharadwaj U, Link TM, Bucknor MD, Pedoia V, Majumdar S. Automatic Deep Learning-assisted Detection and Grading of Abnormalities in Knee MRI Studies. Radiol Artif Intell. 2021 Jan 20;3(3):e200165. doi: 10.1148/ryai.2021200165. Erratum in: Radiol Artif Intell. 2021 May 19;3(3):e219001. PMID: 34142088; PMCID: PMC8166108.

(5) Fayad LM, Parekh VS, de Castro Luna R, Ko CC, Tank D, Fritz J, Ahlawat S, Jacobs MA. A Deep Learning System for Synthetic Knee Magnetic Resonance Imaging: Is Artificial Intelligence-Based Fat-Suppressed Imaging Feasible? Invest Radiol. 2021 Jun 1;56(6):357-368. doi: 10.1097/RLI.0000000000000751. PMID: 33350717; PMCID: PMC8087629.

(6) Chen L, Canton G, Liu W, et al. Fully automated and robust analysis technique for popliteal artery vessel wall evaluation (FRAPPE) using neural network models from standardized knee MRI. Magn Reson Med. 2020;84:2147–2160. https://doi.org/10.1002/mrm.28237

NOTE: This blog post provides general information to help the reader better understand regenerative medicine, musculoskeletal health, and related subjects. All content provided in this blog, website, or any linked materials, including text, graphics, images, patient profiles, outcomes, and information, are not intended and should not be considered or used as a substitute for medical advice, diagnosis, or treatment. Please always consult with a professional and certified healthcare provider to discuss if a treatment is right for you.